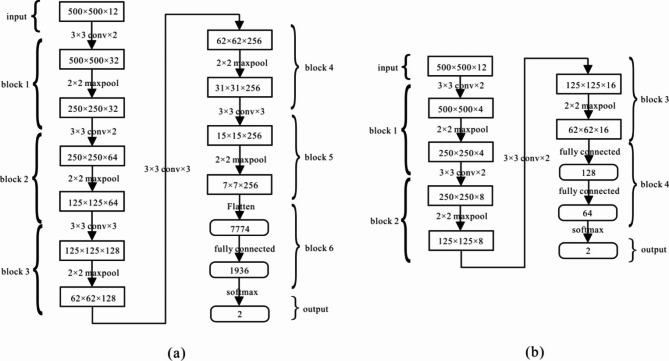

VGG is a CNN proposed by Simonyan and Zisserman in 2014 [47,48]. It showed that increasing network depth can improve a network's final performance. VGG finished second in the classification task of the 2014 ImageNet Large Scale Visual Recognition Challenge (ILSVRC), demonstrating its strong performance. VGG 16 has 16 weight layers (13 convolutional layers and 3 fully connected layers) and is built from three types of layers: convolutional, max-pooling, and fully connected. VGG 16 replaces the large convolution kernels of AlexNet (11 × 11 and 5 × 5) with successive 3 × 3 convolution kernels. For a given receptive field, a stack of small kernels is preferable to a single large kernel. Two stacked 3 × 3 convolutions cover the same 5 × 5 receptive field as one 5 × 5 convolution, and three cover a 7 × 7 field. The stack uses fewer parameters and adds more nonlinear activations. The network therefore becomes deeper while its receptive field stays fixed, which improves its performance. The VGG 16 architecture is shown in Fig. 4a.
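The receptive-field argument above can be checked with a little arithmetic. The sketch below assumes stride-1, undilated convolutions with the same channel count `C` on input and output, and it ignores biases. It compares a stack of `n` 3 × 3 layers with the single large kernel that has the same receptive field. The function names are illustrative only.

```python
def stacked_receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Receptive field of `num_layers` stacked stride-1, undilated convolutions."""
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel: int, channels: int) -> int:
    """Weight count of one kernel x kernel conv with `channels` inputs and outputs (no biases)."""
    return kernel * kernel * channels * channels


def compare(num_layers: int, channels: int) -> tuple[int, int, int]:
    """Return (receptive field, weights of the 3x3 stack, weights of one equivalent large conv)."""
    rf = stacked_receptive_field(num_layers)
    stacked = num_layers * conv_weights(3, channels)
    single = conv_weights(rf, channels)
    return rf, stacked, single


if __name__ == "__main__":
    for n in (2, 3):
        rf, stacked, single = compare(n, channels=256)
        print(f"{n} x (3x3): receptive field {rf}x{rf}, "
              f"{stacked:,} weights vs {single:,} for one {rf}x{rf} conv")
```

For `C = 256`, two stacked 3 × 3 layers need 1,179,648 weights versus 1,638,400 for a single 5 × 5 layer. Three stacked layers need 1,769,472 versus 3,211,264 for a single 7 × 7 layer. In general, the ratio is 9n·C² against (2n + 1)²·C².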

Figure 4. (a) Schematic diagram of the VGG 16 network architecture. (b) Schematic diagram of the VGG 8 network architecture.

The structure of VGG 8 is very simple: the network uses the same convolution kernel size and the same max-pooling size throughout. However, as VGG grows deeper, its number of parameters also grows. This produces a larger model than networks such as AlexNet and LeNet and requires a longer training time. For this reason, we adopt the previously reported VGG 8 network structure, which has eight hidden layers, as shown in Fig. 4b.
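To show how quickly depth inflates the parameter count, the sketch below tallies the parameters of the standard VGG16 configuration ("D" in Simonyan and Zisserman's paper). It assumes a 224 × 224 RGB input, 3 × 3 convolutions with padding 1 (spatial size preserved), 2 × 2 max pooling with stride 2, and a 1000-class output. The exact VGG 8 layer layout is not given here, so it is not reproduced. The function and constant names are hypothetical.

```python
# Standard VGG16 (configuration "D"): integers are conv output channels, "M" is 2x2 max pooling.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]
VGG16_FC = [4096, 4096, 1000]  # assumes 1000 ImageNet classes


def count_params(conv_cfg, fc_cfg, in_channels=3, input_size=224, kernel=3):
    """Return (conv parameters, fully connected parameters), biases included."""
    conv_total = 0
    channels, size = in_channels, input_size
    for layer in conv_cfg:
        if layer == "M":
            size //= 2  # 2x2 pooling with stride 2 halves height and width
        else:
            # padding 1 keeps the spatial size; weights + one bias per output channel
            conv_total += kernel * kernel * channels * layer + layer
            channels = layer
    fc_total = 0
    features = channels * size * size  # flattened feature map feeding the first FC layer
    for units in fc_cfg:
        fc_total += features * units + units
        features = units
    return conv_total, fc_total


if __name__ == "__main__":
    weight_layers = sum(1 for l in VGG16_CONV if l != "M") + len(VGG16_FC)
    conv, fc = count_params(VGG16_CONV, VGG16_FC)
    print(f"{weight_layers} weight layers, {conv:,} conv + {fc:,} FC = {conv + fc:,} parameters")
```

Under these assumptions, VGG16 has about 138.4 million parameters (14,714,688 convolutional and 123,642,856 fully connected). Most of them sit in the first fully connected layer. This is why a shallower variant such as VGG 8 is considerably cheaper to store and train.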